Morris Alper

I am a postdoctoral researcher at Carnegie Mellon University's Language Technologies Institute (LTI). My research focuses on creative multimodal machine learning inspired by linguistics. This includes the use of structures encoded by language to interpret and control modalities such as vision and audio, ML as a creativity aid, and innovative methods for low-resource languages. I completed my PhD in multimodal machine learning at Tel Aviv University, where I also received my MSc with honors. I received my BSc from MIT, with a dual major in mathematics and linguistics. I was a research scientist intern at Meta in 2024, and I have industry experience working as a data scientist and teaching machine learning to industry professionals.

Email / CV / Scholar / Twitter / Bluesky / GitHub / LinkedIn / ORCID

Publications

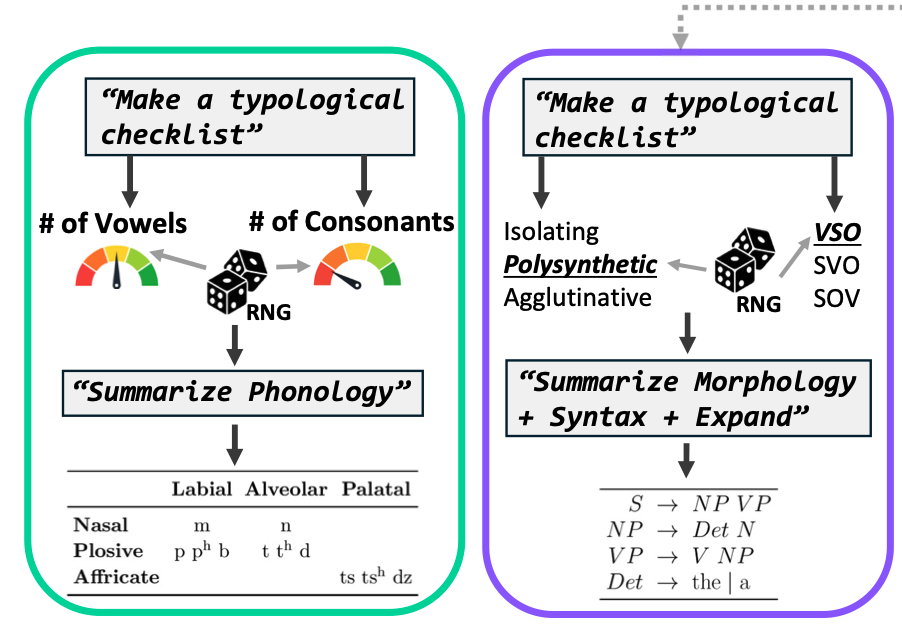

ConlangCrafter: Constructing Languages with a Multi-Hop LLM Pipeline

Morris Alper*, Moran Yanuka*, Raja Giryes, Gašper Beguš

*Equal contribution

arXiv, 2025

ConlangCrafter uses LLMs to generate constructed languages through a multi-hop pipeline. The pipeline decomposes language design into modular stages, with components that encourage consistency and typological diversity.

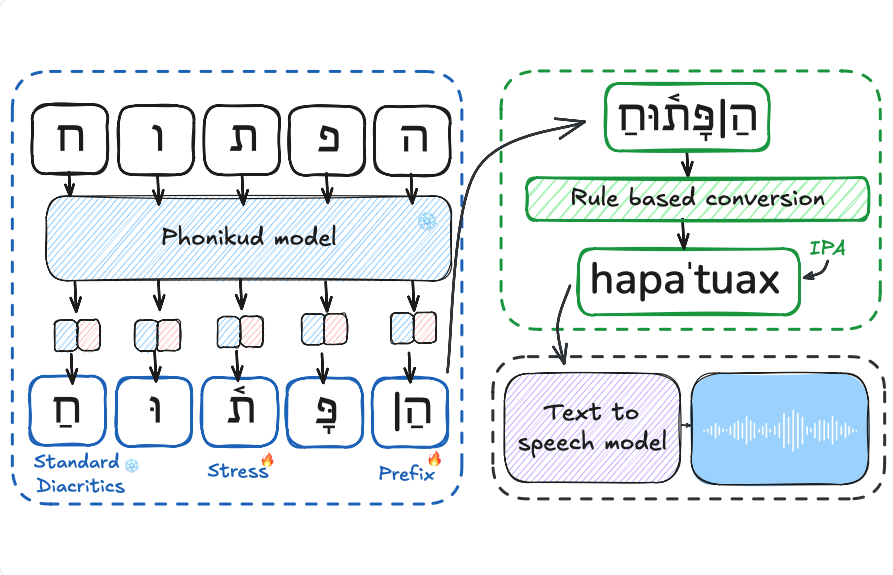

Phonikud: Hebrew Grapheme-to-Phoneme Conversion for Real-Time Text-to-Speech

Yakov Kolani, Maxim Melichov, Cobi Calev, Morris Alper

arXiv, 2025

We introduce Phonikud, an open-source grapheme-to-phoneme (G2P) system for Modern Hebrew, along with the new ILSpeech dataset and benchmark for Hebrew TTS and G2P. Together, these enable training real-time Hebrew TTS with better phonetic accuracy.

WildCAT3D: Appearance-Aware Multi-View Diffusion in the Wild

Morris Alper, David Novotny, Filippos Kokkinos, Hadar Averbuch-Elor, Tom Monnier

NeurIPS, 2025

WildCAT3D learns to generate novel views of scenes from diverse 2D scene image data captured in the wild. This enables appearance-controlled novel view synthesis from a single image.

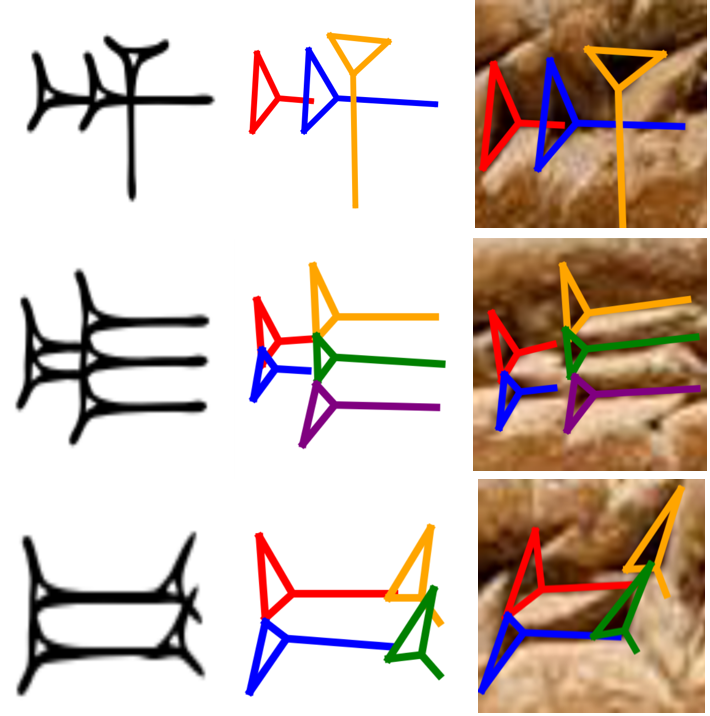

ProtoSnap: Prototype Alignment for Cuneiform Signs

Rachel Mikulinsky*, Morris Alper*, Shai Gordin, Enrique Jimenez, Yoram Cohen, Hadar Averbuch-Elor

*Equal contribution

ICLR, 2025

We present ProtoSnap, a method for aligning prototype-based skeletons to scans of cuneiform signs, and show that it has applications in downstream cuneiform OCR.

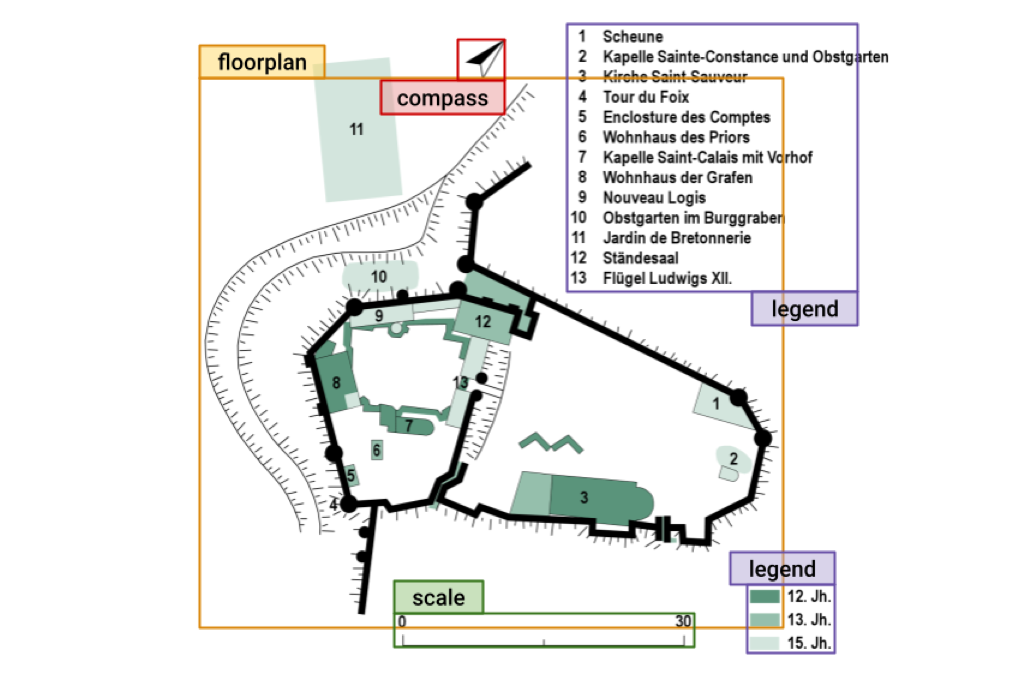

WAFFLE: Multimodal Floorplan Understanding in the Wild

Keren Ganon*, Morris Alper*, Rachel Mikulinsky, Hadar Averbuch-Elor

*Equal contribution

WACV (Oral), 2025

We introduce WAFFLE, a novel multimodal floorplan understanding dataset containing nearly 20K diverse floorplan images and their metadata. We show that this data enables progress on new building understanding tasks.

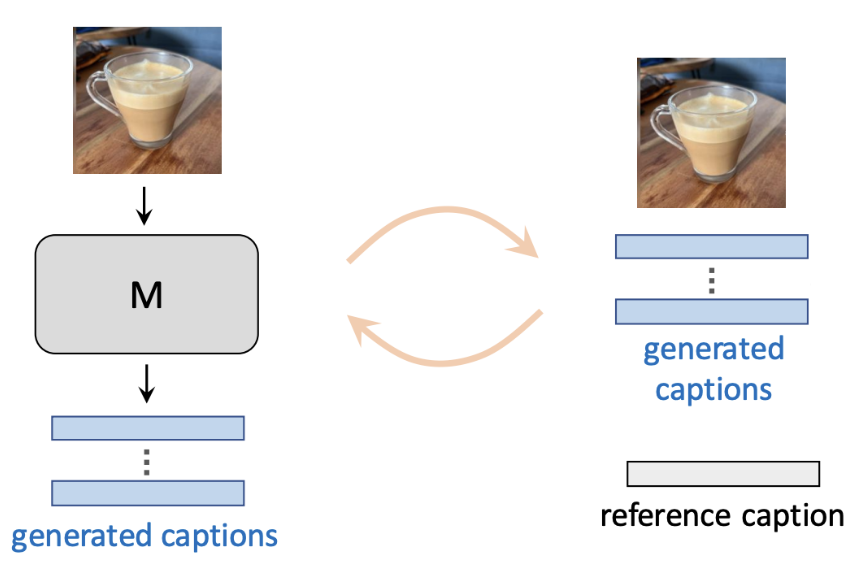

Mitigating Open-Vocabulary Caption Hallucinations

Assaf Ben-Kish, Moran Yanuka, Morris Alper, Raja Giryes, Hadar Averbuch-Elor

EMNLP, 2024

We propose a framework for addressing open-vocabulary hallucinations in image captioning models. It includes a new benchmark and a reinforcement learning-based method for reducing such hallucinations.

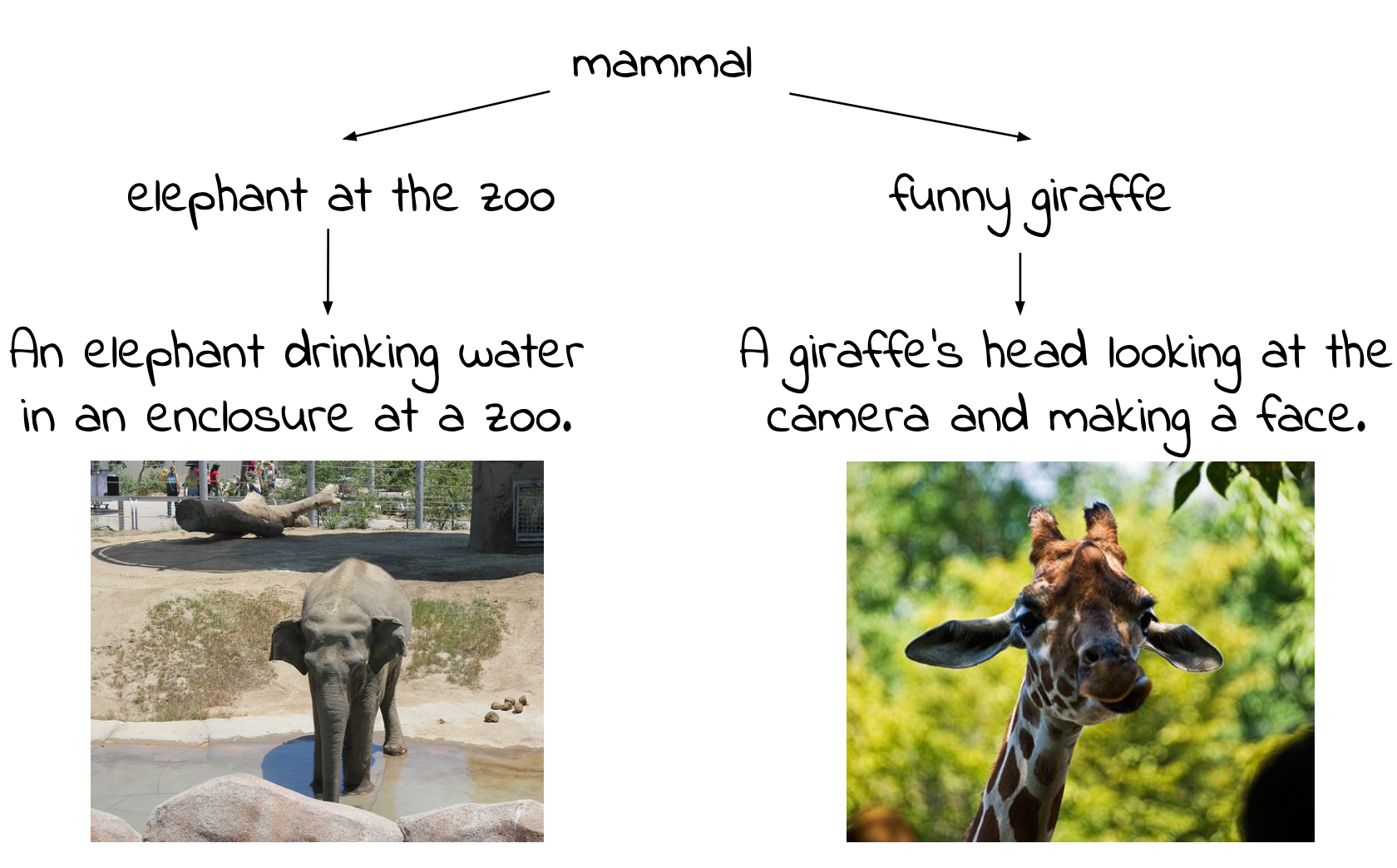

Emergent Visual-Semantic Hierarchies in Image-Text Representations

Morris Alper, Hadar Averbuch-Elor

ECCV (Oral), 2024

We show that foundation VLMs such as CLIP model visual-semantic hierarchies. We propose the Radial Embedding framework for probing and optimizing this knowledge, and we introduce the HierarCaps dataset of ground-truth image caption hierarchies.

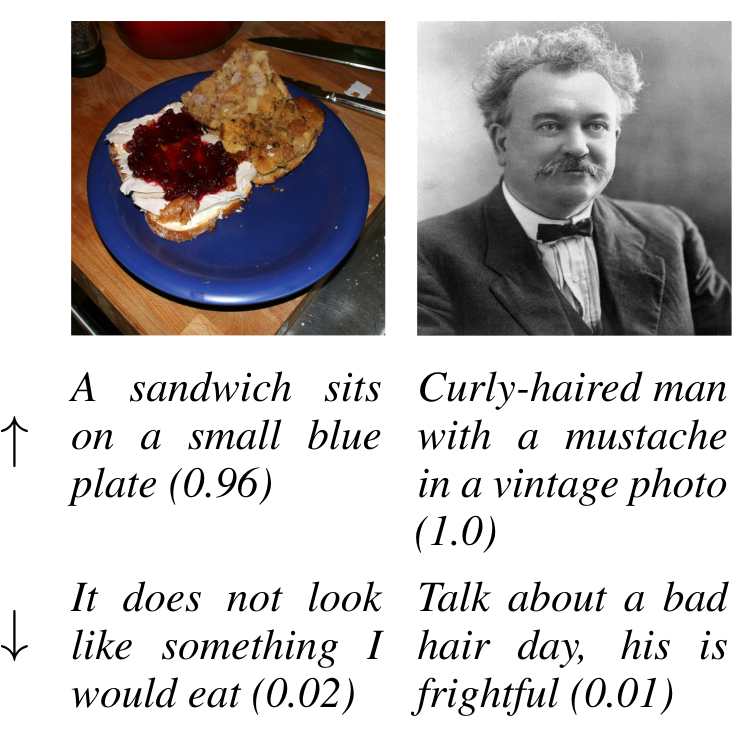

ICC: Quantifying Image Caption Concreteness for Multimodal Dataset Curation

Moran Yanuka, Morris Alper, Hadar Averbuch-Elor, Raja Giryes

ACL (Findings), 2024

We quantify image caption concreteness using information loss in foundation vision-language models. We then use this score to filter web-scale multimodal datasets.

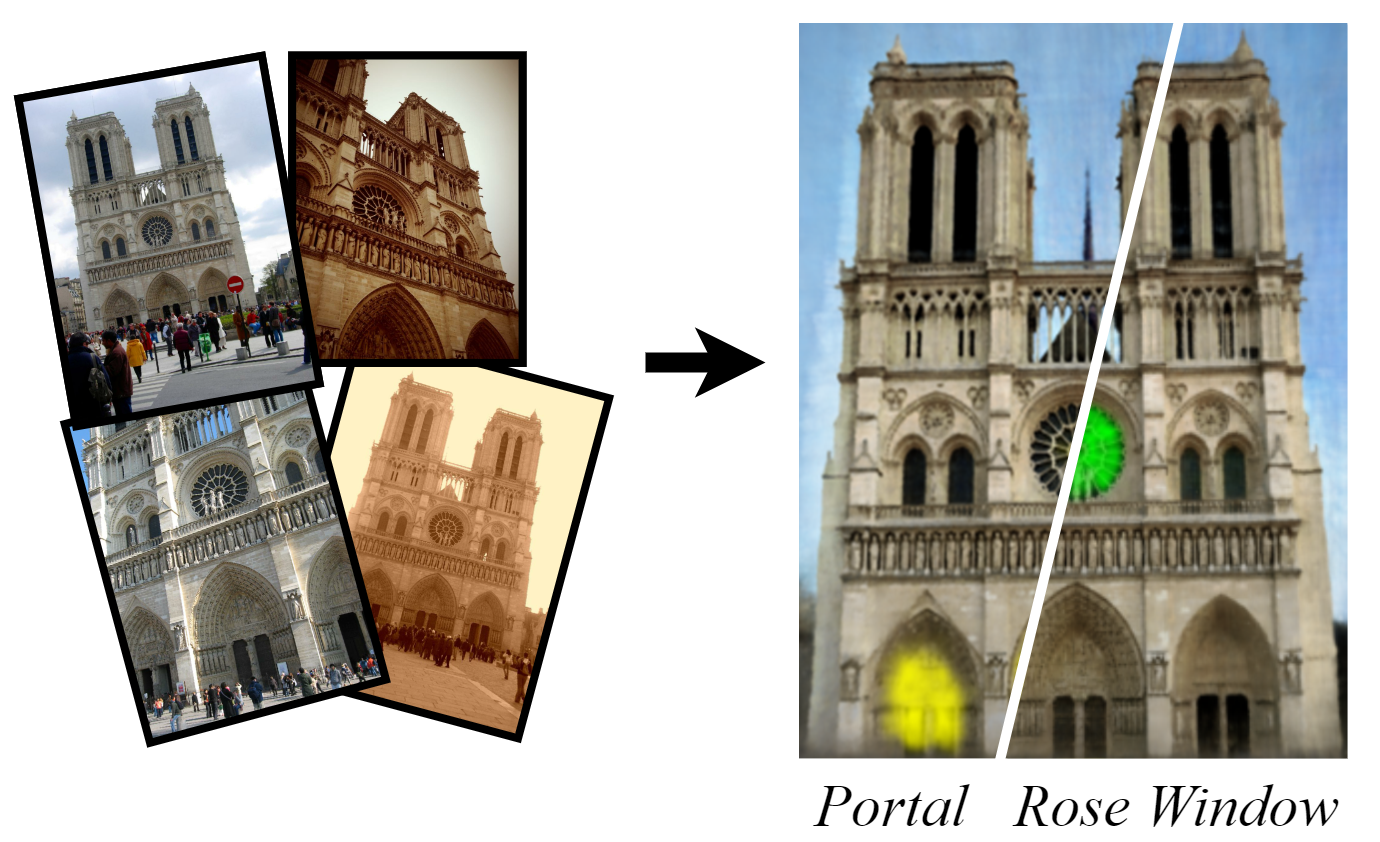

HaLo-NeRF: Learning Geometry-Guided Semantics for Exploring Unconstrained Photo Collections

Chen Dudai*, Morris Alper*, Hana Bezalel, Rana Hanocka, Itai Lang, Hadar Averbuch-Elor

*Equal contribution

Eurographics, 2024

We learn a semantic localization field for textual descriptions over collections of in-the-wild images that depict a large-scale scene.

Kiki or Bouba? Sound Symbolism in Vision-and-Language Models

Morris Alper, Hadar Averbuch-Elor

NeurIPS (Spotlight), 2023

Presentation from IMVC 2024

We generate images from prompts that contain pseudowords (nonsense words) and analyze the shapes in them. This shows that AI image generation models exhibit sound-shape associations similar to those known from human psychology.

Learning Human-Human Interactions in Images from Weak Textual Supervision

Morris Alper, Hadar Averbuch-Elor

ICCV, 2023

We frame the understanding of human-human interactions in images as free-text generation. We also provide a new benchmark and show how to learn this task with weak supervision from Internet image captions.

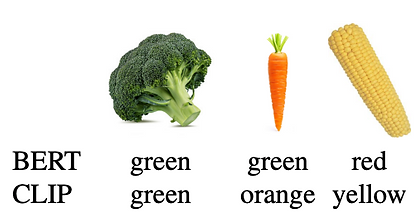

Is BERT Blind? Exploring the Effect of Vision-and-Language Pretraining on Visual Language Understanding

Morris Alper*, Michael Fiman*, Hadar Averbuch-Elor

*Equal contribution

CVPR, 2023

We find that text encoders trained multimodally outperform text encoders trained unimodally on visual reasoning in text.

Miscellanea

Featured in the News:

Blog Posts and Projects:

Awards and Honors:
